September 10th, 2008

Some of you may be aware of the great work done so far by Raul Fernandez Hernandez (“farsthary”) concerning volume rendering in Blender. For those not in the know, rendering volumes of participating media, as opposed to solid surfaces, has many uses, such as realistically rendering smoke, fire, clouds or fog, or even, with more complicated techniques, rendering volume density data such as MRI scans for medical visualisation.

Raul has already completed a fair bit of work implementing this in Blender. Because he lives in Cuba, where he doesn’t enjoy the same kind of connectivity as in many other parts of the world, it’s been difficult for him to participate in development and share his work. A few weeks ago, he emailed me asking for help with his code, and with maintaining it publicly. I promised I’d at least have a look at the first patch he was able to release and see what I thought.

In some spare time, I had a go at taking apart his patch. Unfortunately, it became clear that it wasn’t suitable for immediate inclusion. It was Raul’s first time coding in Blender (or perhaps in 3D), so he wasn’t aware of some of the techniques available to make his life easier, or of how to integrate the code as cleanly as it could or should be. On top of this, working alone without being able to easily communicate with other developers, he’d been working hard on adding more and more features and options on top of a structure which I think could be done differently and a lot more cleanly at a fundamental level. This led to some quite complicated code, and a tricky UI for artists to interact with.

I proposed to Raul that I start again from scratch, with something simpler and more cleanly integrated, and then merge in what I could later down the track, step by step. He was very enthusiastic about this, and excitedly accepted my invitation to work closely on integrating things like the simulation work he’s been doing, once a new structure is well defined. Recently, in a few days off from work, I sat down and started fresh, based on the approach in Physically Based Rendering.

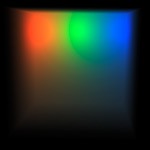

Spot light in constant density volume

3 point lights in constant density volume

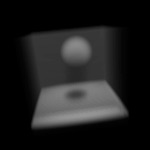

Textured spot light in constant density volume

So far I’ve got it working reasonably well with about 90% new code. Unlike before, it’s following a physically based approach, with a region of constant or variable density, calculating light emission, absorption, and single scattering from lamps. My aim is to get a simple, easier to use version ready, optimised for practical rendering tasks like smoke, fire or clouds. Once this is working perfectly, integrated cleanly and completely, and working in Blender SVN, further down the track we can think about adding some of the less common capabilities like isosurfaces or volume data sets.
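To make the emission, absorption and single scattering terms a bit more concrete, here is a minimal Python sketch of ray marching through a constant density region. It is not the Blender code: the function name, parameter names and the simplifications are all assumptions for illustration. In particular, it assumes an isotropic phase function, a single lamp whose light reaches every sample unattenuated, and emission that scales with density.

```python
import math

def march_ray(density, sigma_a, sigma_s, emission, light_radiance,
              ray_length, steps=100):
    """Integrate radiance along one ray through a constant density volume.

    Hypothetical helper for illustration only. Returns the accumulated
    radiance and the transmittance left over for whatever lies behind
    the volume.
    """
    step = ray_length / steps
    sigma_t = (sigma_a + sigma_s) * density  # extinction per unit length
    phase = 1.0 / (4.0 * math.pi)            # isotropic phase function (assumed)
    transmittance = 1.0
    radiance = 0.0
    for _ in range(steps):
        # Light added at this sample: emission plus light from the lamp
        # scattered once towards the camera (lamp assumed unattenuated).
        source = density * (emission + sigma_s * phase * light_radiance)
        radiance += transmittance * source * step
        # Absorption and out-scattering attenuate everything further along.
        transmittance *= math.exp(-sigma_t * step)
    return radiance, transmittance
```

The leftover transmittance is the kind of value that would feed into alpha, or into compositing the volume over a solid surface behind it.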

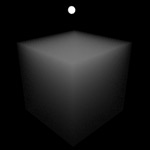

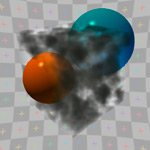

Cloud texture controlling density, lighting from volume emission only

Cloud texture controlling density, 3 lights with single scattering

Spherical blend textures controlling density, directional light with single scattering

There’s still a lot to do – there’s one remaining bug with single scattering I must try and track down, and then there’s a lot of integration work to do, calculating alpha, rendering volumes in front of solid surfaces etc. Before too long I’ll commit this to the newly created ‘sim/physics’ SVN branch and work on it in there. I don’t know how long it will be before Raul is able to start working on this again too, since he’s under some horrible circumstances after Hurricane Gustav ravaged Cuba. At least in the near term, I’ll be able to continue poking away at this in SVN.

Some things on my want-to-do list include:

- Plenty of optimisations

- Adaptive sampling – step size and/or early termination

- Alternative anisotropic phase (scattering) functions such as Mie or Henyey-Greenstein

- Wavelength (R/G/B) dependent absorption, so light gets tinted as it travels through a medium

- An optimised bounding box or sphere ‘volume region’ primitive, which could be much faster than raytracing meshes as is done now

- Multiple layers deep ray intersections, to enable concave meshes or volume meshes in front of volume meshes

- Rendering Blender’s particle systems, either by creating spherical ‘puffs’ at each particle as Afterburner or PyroCluster do, or perhaps by estimating particle density with a kd-tree or such
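Two of the items above are small enough to sketch in a few lines of Python: the Henyey-Greenstein phase function and wavelength dependent absorption. This is only an illustration under stated assumptions, not planned Blender code. The function names are hypothetical, `cos_theta` is taken as the cosine of the angle between the light's direction of travel and the scattered direction (so positive `g` means forward scattering), and absorption is modelled with the Beer-Lambert law applied separately to each of R, G and B.

```python
import math

def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function.

    g in (-1, 1): g > 0 favours forward scattering, g < 0 backward
    scattering, and g == 0 reduces to the isotropic value 1 / (4 * pi).
    """
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)

def tinted_transmittance(sigma_a_rgb, density, distance):
    """Per-channel Beer-Lambert transmittance through a constant density.

    Channels with a larger absorption coefficient fade faster, which
    tints the light as it travels through the medium.
    """
    return tuple(math.exp(-s * density * distance) for s in sigma_a_rgb)
```

For example, `tinted_transmittance((0.1, 0.5, 1.0), 1.0, 1.0)` lets through more red than green and more green than blue, so white light comes out with a warm tint.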

I hope to be able to provide some more updates on this before too long!

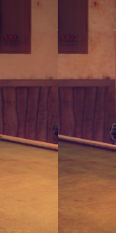

I wrote some code a little while ago that did it all automatically in the shading process, applying inverse gamma correction to colour textures before rendering, then correcting back to gamma 2.2 afterwards. After adjusting lights from old scenes to have the same appearance, it gave some nice results. It seemed to bring out a lot more detail in the textures, which got washed out before (left is normal, right is with linear workflow). It’s not finished though: it also needs to adjust the lights in the preview render, and inverse gamma correct colour swatches too, so the flat colours you pick in the UI are also linear.
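The idea can be sketched in a few lines of Python. This is a simplified illustration rather than the actual shading code: the function names are hypothetical, it assumes a plain power curve with gamma 2.2 instead of the exact sRGB transfer function, and "shading" is reduced to multiplying by a light intensity.

```python
def to_linear(value, gamma=2.2):
    """Inverse gamma correction: display-space value -> linear light."""
    return value ** gamma

def to_display(value, gamma=2.2):
    """Gamma correction: linear light -> display-space value."""
    return value ** (1.0 / gamma)

def shade(texture_rgb, light_intensity):
    """Light a texture colour in linear space, then convert for display."""
    linear = [to_linear(c) for c in texture_rgb]
    # All lighting maths happens on linear values (clamped to 1.0 here).
    lit = [min(c * light_intensity, 1.0) for c in linear]
    return [to_display(c) for c in lit]
```

With a light intensity of 1.0 the colour round-trips unchanged, while other intensities are applied to linear values rather than to already gamma-encoded ones. That difference is why lights in old scenes need adjusting to keep the same appearance.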
