Today I finally got around to fixing Blender’s odd camera model so that it more closely resembles its real-world counterparts. Previously, Blender’s camera was hardcoded to a sensor aperture width of 32mm, which doesn’t match any commonly available real-world sensor or film size. In turn, the lens focal length (in mm) didn’t correspond to any real-world lens whatsoever. This was misleading when trying to choose lenses intuitively based on photographic experience, and it also made it much harder to match live-action footage.
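For reference, the horizontal field of view of an ideal rectilinear lens focused at infinity follows from the sensor width w and focal length f as FOV = 2·atan(w / 2f). The minimal Python sketch below assumes that simple pinhole model and shows why a fixed 32mm width was misleading: the same focal-length number gives a narrower view than it would on a 36mm-wide full-frame sensor. The function and constant names are illustrative only, not part of Blender’s API.

```python
import math

FULL_FRAME_WIDTH_MM = 36.0   # 35mm still film / full-frame sensor width
OLD_BLENDER_WIDTH_MM = 32.0  # the previously hardcoded sensor aperture width


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal FOV in degrees for a pinhole camera focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def equivalent_focal_length(focal_length_mm: float,
                            from_width_mm: float,
                            to_width_mm: float) -> float:
    """Focal length giving the same FOV on a sensor of width `to_width_mm`."""
    # FOV depends only on the ratio width / focal length, so it scales linearly.
    return focal_length_mm * to_width_mm / from_width_mm


if __name__ == "__main__":
    for f in (24.0, 35.0, 50.0):
        old = horizontal_fov_deg(f, OLD_BLENDER_WIDTH_MM)
        real = horizontal_fov_deg(f, FULL_FRAME_WIDTH_MM)
        ff = equivalent_focal_length(f, OLD_BLENDER_WIDTH_MM, FULL_FRAME_WIDTH_MM)
        print(f"{f:.0f}mm: old Blender {old:.1f} deg vs full frame {real:.1f} deg "
              f"(old value frames like {ff:.1f}mm on full frame)")
```

Under this model, a “35mm” lens in the old camera framed like a roughly 39mm lens (35 × 36 / 32) on a full-frame body.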

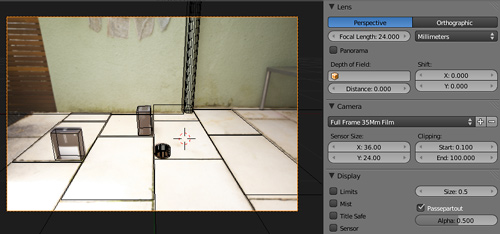

I’ve made a patch that allows specifying a sensor size, along with a handful of common presets (it’s easy to add more). This first version isn’t 100% correct, in that it doesn’t adjust the FOV for focus distance (in reality, extending the lens elements to focus slightly alters the FOV), but this could easily be added as an option later. It also derives the apparent FOV purely from the horizontal aperture width. Options for fitting to the vertical or diagonal dimension could be added later, but I’d need to check how the renderer handles frame resolutions with different aspect ratios.

I thought it would be fun to do some real-world tests, so I quickly set up a very rough set in my backyard to see whether a virtual set in Blender would match up. The setup and measurements were very dodgy, and I didn’t expect a perfect match. I shot with two cameras: first, a 5D Mk II with a 12-24mm at 24mm and a 24-70mm at 70mm. To compound the unscientific sketchiness of the test even further, I then tried my 6×6 Mamiya C330f TLR with 65mm and 80mm lenses, photographing the ground-glass focusing screen with a compact digital camera (yes, I was too cheap to spend film on this). Given all this, I was pretty surprised to see that, with a bit of tweaking, it appears to be doing the right thing.
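The lenses in this test make a handy check of the arithmetic. Assuming a 36mm-wide frame for the 5D Mk II and a nominal 56mm-wide frame for 6×6 film (both approximate; the TLR’s ground glass may show slightly less than the full negative), this Python sketch prints each lens’s horizontal FOV, its full-frame equivalent focal length, and the value you would have needed to enter in the old 32mm-width camera to get the same horizontal framing. The names and widths are illustrative assumptions, not values taken from the patch.

```python
import math

OLD_BLENDER_WIDTH_MM = 32.0
FULL_FRAME_WIDTH_MM = 36.0

# (camera, lens focal length in mm, assumed horizontal aperture width in mm)
TEST_SHOTS = [
    ("5D Mk II", 24.0, 36.0),
    ("5D Mk II", 70.0, 36.0),
    ("Mamiya C330f (6x6)", 65.0, 56.0),
    ("Mamiya C330f (6x6)", 80.0, 56.0),
]


def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal FOV in degrees for a pinhole camera focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def scaled_focal_length(focal_length_mm: float, from_width_mm: float,
                        to_width_mm: float) -> float:
    """Focal length with the same width/focal-length ratio, hence the same FOV."""
    return focal_length_mm * to_width_mm / from_width_mm


if __name__ == "__main__":
    for camera, f, width in TEST_SHOTS:
        fov = horizontal_fov_deg(f, width)
        full_frame = scaled_focal_length(f, width, FULL_FRAME_WIDTH_MM)
        old_blender = scaled_focal_length(f, width, OLD_BLENDER_WIDTH_MM)
        print(f"{camera} {f:.0f}mm: {fov:.1f} deg horizontal, "
              f"~{full_frame:.0f}mm full-frame equivalent, "
              f"{old_blender:.1f}mm in the old camera")
```

By this estimate, the 80mm TLR lens frames horizontally like a roughly 51mm lens on the 5D, while the 70mm shot would have needed about 62mm in the old camera.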

Anyway, the patch is up on the Blender patch tracker. It would be good to get some testing before I try to commit it, especially since I’m not sure how it should interact with the game engine.